I am Luke E. Babarinde. This is my journey of doing more with a pair of hands on the computer keyboard at the intersection of Machine Learning, Neural Networks, and Automation. It is a journey to continuously transform and innovate, and a personal quest to truly become a master of 'Mixture of Experts' (MoE Explained - https://huggingface.co/blog/moe). I have always had the desire to embark on digital projects, but I always hit a stumbling block because, like everyone else, I cannot be the 'Master of All Trades'. Yes, I am decidedly a jack of many trades and master of some, but mediocre at many more. For instance, it is challenging to be an expert full-stack engineer, be great at Machine and Deep Learning, and understand the deeper mechanics of data structures. These are fields that excite my curiosity, but it is near impossible to be an expert at all of them.

That said, my commercial exposure across the fundamentals of computing spans software engineering (DevOps and automation), application and data security, anti-reverse engineering, and consulting. The foundational knowledge acquired in each of those domains has not only allowed me to thrive in an ever-changing world but, importantly, enables me to keep striving for what is possible and to remain excited for the world of tomorrow.

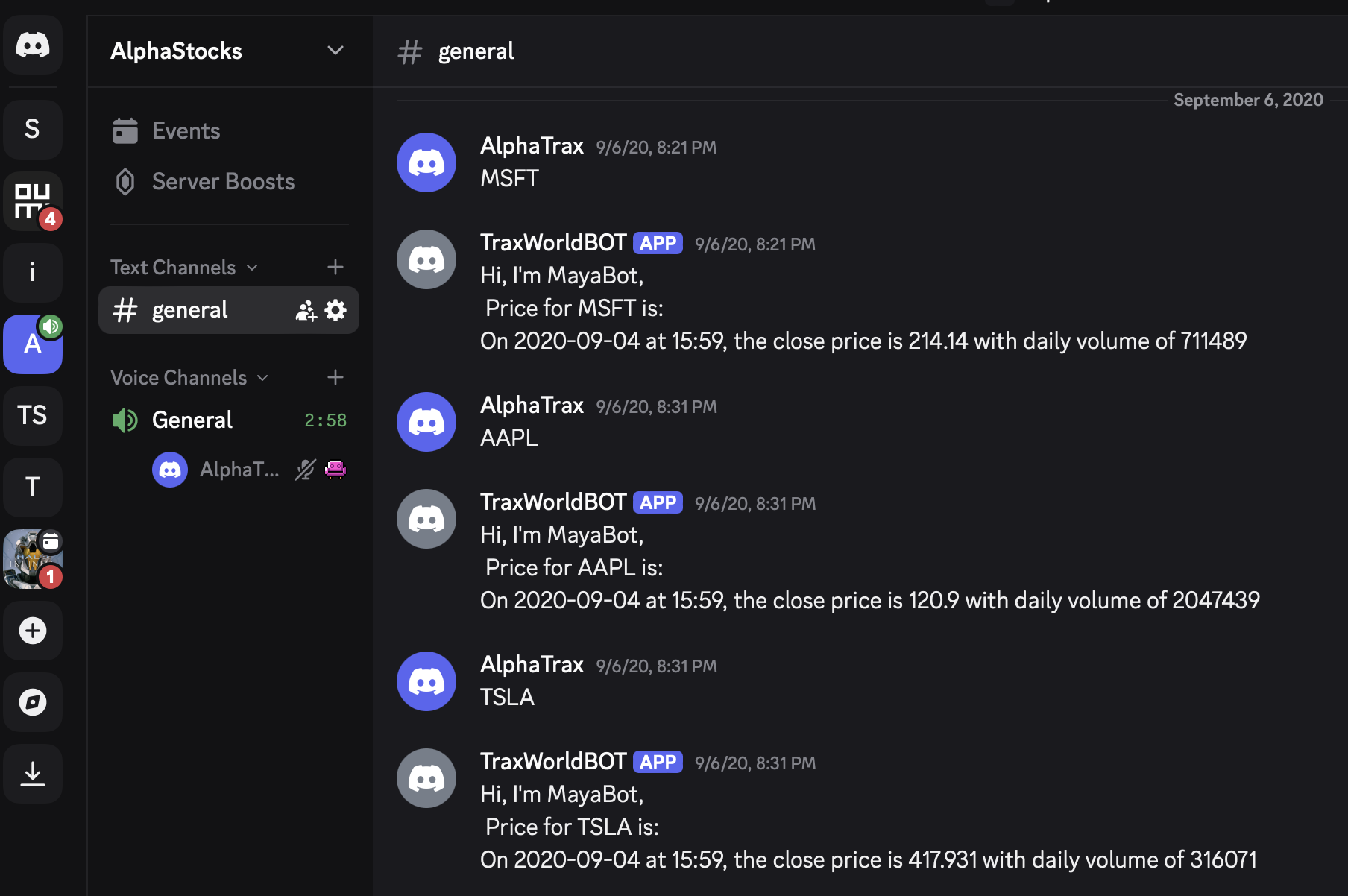

This path to doing more with my foundational engineering knowledge led me to discover the Generative Pre-trained Transformer (GPT 2.0, to be precise) while I was building a stock chatbot in 2020, right after completing my Masters in Technology with the research topic 'Enhancing Cybersecurity with Artificial Intelligence and Automation'. Right from the start, I was blown away by the discovery of something so powerful, and I followed up by seeking admission into early access to GPT at OpenAI. I also quickly enrolled in Andrew Ng's free courses on Coursera during the Covid years. I spent those isolated years leveling up my understanding of Machine Learning and Neural Networks.

Below is the rudimentary version of that stock chatbot, dated 2020, before I began the search for a semantic, natural-language chatbot:

Fast forward to OpenAI taking GPT-3 public in 2022: with my prior experience in ML, I was ready to embark on the next trail. From my early awareness of GPT, the possibilities were clear to me, especially around feature engineering, which had always been the sticking point for unsupervised training.

But by the time GPT-3 had captured the public's imagination the world over, I had taken a two-year AI winter hiatus to study for an MBA in Technology Management at the Open University, while keeping a firm eye on the development of GPTs, especially what lay under the hood of the innovation, by reading whitepapers and anything that revealed the deeper magic behind the curtain of transformers.

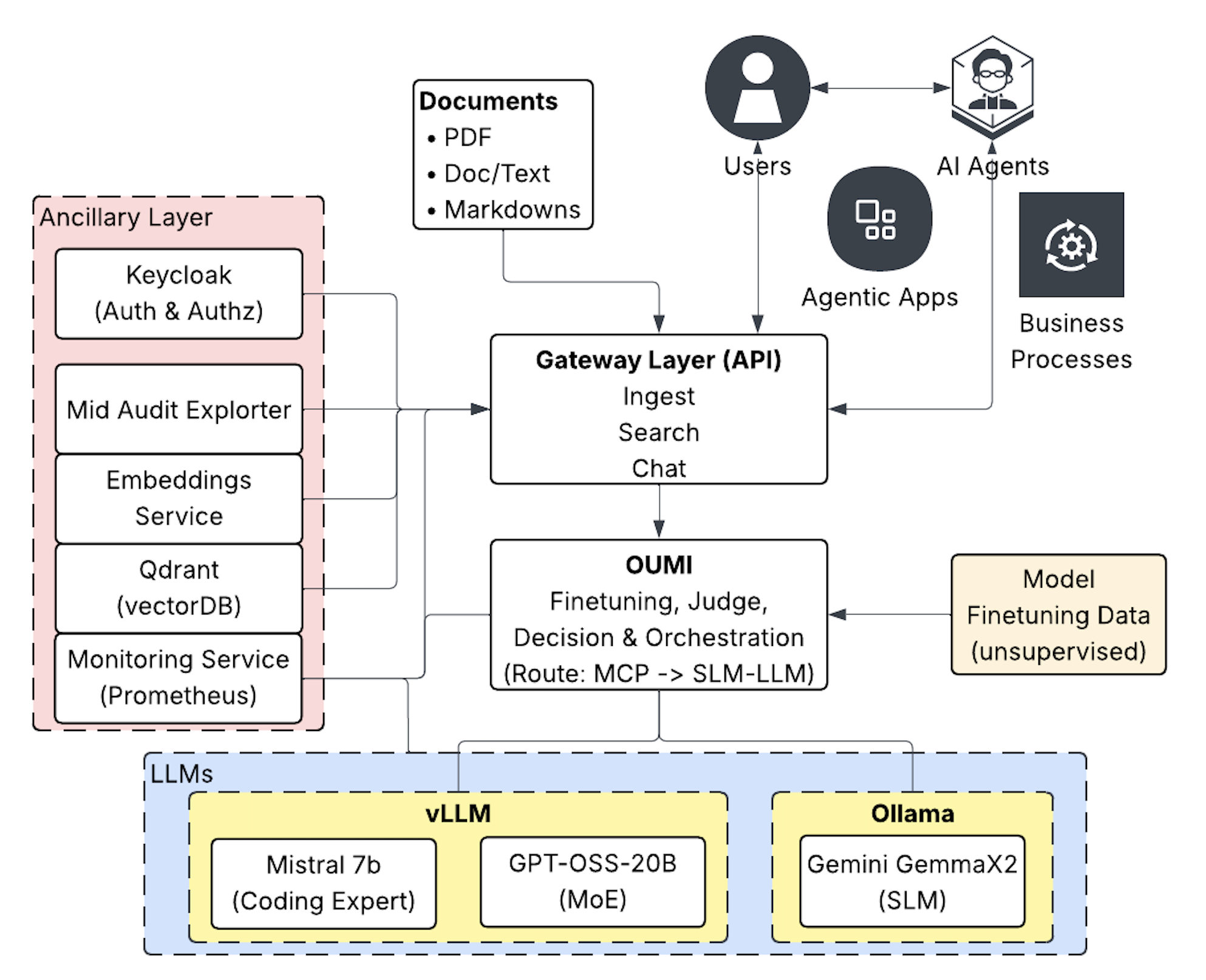

I eventually decided to take the deeper engineering track into building LLM infrastructure in July 2025, with the idea of solving two key challenges:

- Provide LLMs to organizations that are highly sensitive about data privacy.

- Lower the adoption barrier for organizations that can directly benefit from LLMs.

In addition to those two objectives, the private AI infrastructure will enable organizations to begin the AI-Native transformation journey to optimize their workflows.

As this journey continues, I will use this blog to demonstrate what is possible with a OneManArmy that is brought to you in part by "Mixture of Experts".